Once you spend even a little time in the world of elite college admissions, you’ll inevitably come across the Holy Grail of prestige: the U.S. News & World Report rankings. People refer to schools on the Best National Universities list with awed reverence. Terms like T20 and T30 have become shorthand for the institutions topping the annually published rankings. But what does it really mean to be one of the 20 schools with T20 status? What makes the University of California, Los Angeles the 20th “best” university and Emory University the 21st?

The short answer: a series of arbitrarily weighted data points that have little to no bearing on whether School A or School B is the better fit for you. If someone ever tries to tell you that you need to get into a top-ranked school, I want you to send them this article. Let’s examine U.S. News’ methodology to find out how they decide which schools do or don’t land a coveted spot atop the rankings.

To begin, we should note that there isn’t just one U.S. News list. Rather, schools are classified into various categories: National Universities, National Liberal Arts Colleges, Regional Universities and Regional Colleges. This already presents a conundrum: you might have a better experience at, say, a liberal arts college than a major university, yet the two never appear on the same list. For the sake of this article, though, I’m going to focus on the National Universities rankings, the list with the brand-name schools (Harvard, Stanford, etc.) that first come to mind when you think of elite colleges.

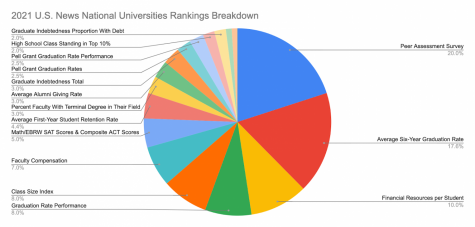

Here is a breakdown of how the rankings are calculated:

There’s a lot happening on this pie chart, so let’s take a closer look at each part of U.S. News’ formula.

Peer Assessment Survey (20% of overall ranking)

U.S. News explains that “each year, top academics — presidents, provosts and deans of admissions — rate the academic quality of peer institutions with which they are familiar on a scale of 1 (marginal) to 5 (distinguished).” In other words, the most renowned college ranker derives its most important metric from asking administrators at other schools what they think about their rivals. Meanwhile, survey results from people currently at said school are not included in the ranking process.

How higher-ups at Yale view Princeton: 20% of Princeton’s rank

How Princeton students and professors view Princeton: 0% of Princeton’s rank

Average Six-Year Graduation Rate (17.6% of overall ranking) and Average First-Year Student Retention Rate (4.4% of overall ranking)

These two values form another 22% of the rankings, which intuitively makes sense: you want students staying at your school and successfully graduating. But consider the reasons why somebody might not finish college — and how little they often have to do with the quality of the school. For example, Washington University in St. Louis has a higher graduation rate than MIT. Is that because WashU is “better” or because MIT has more than six times as many students from bottom-20% income families? One trend you’ll notice throughout the rankings formula is that, according to U.S. News, rich students = better school.

Financial Resources per Student (10% of overall ranking)

I’ll be honest: I don’t know what exactly U.S. News classifies as “instruction, research, student services and related educational expenditures,” but this theoretically seems like the most sensible metric yet.

Class Size Index (8% of overall ranking) and Student-Faculty Ratio (1% of overall ranking)

Regarding U.S. News’ class size index, “schools score better the greater their proportions of smaller classes.” Small class sizes and lots of direct student-faculty interaction were very important factors for me when deciding where I wanted to attend, but maybe you want larger lectures and/or a larger student body. Maybe this isn’t important to you at all. This is an example of where your personal preferences may not align with the methodology at hand.

Graduation Rate Performance (8% of overall ranking), Pell Grant Graduation Rate (2.5% of overall ranking) and Pell Grant Graduation Rate Performance (2.5% of overall ranking)

U.S. News compares schools’ actual graduation rates with their predicted ones using a model that accounts for “admissions data, the proportion of undergraduates awarded Pell Grants, school financial resources, the proportion of federal financial aid recipients who are first-generation college students and National Universities’ math and science orientations.”

This provides some semblance of a resolution to my earlier qualms about graduation rate data. However, in the context of an overachiever deciding between elite schools, the extent to which a school did the bare minimum of awarding diplomas probably won’t be a major concern.

Meanwhile, my guess is that even fewer people care about the specific outcomes for recipients of Pell Grants, federal grants for low-income students, when ranking schools. I wish this weren’t the case, and I, for one, am glad that U.S. News “adjusted [this data] to give much more credit to schools with larger Pell student proportions.” If rankings are going to have tangible effects on colleges hoping to outdo their peer institutions, then I’ll absolutely welcome incentivizing schools to make higher education more accessible to those who would benefit the most.

Faculty Compensation (7% of overall ranking), Percentage of Faculty With Terminal Degree in Their Field (3% of overall ranking) and Percentage Faculty That Is Full Time (1% of overall ranking)

Trying to measure professor quality is admittedly a difficult task. If I were creating these rankings, this is a spot where I would incorporate the perspective of current students, but these three metrics are all understandable if you’re insistent on objective numbers. Schools that offer better compensation usually have an easier time attracting the top faculty in their field, while terminal degrees and full-time status are rough proxies for faculty expertise and commitment to their university-related work.

Enrolled Students’ SAT and ACT Scores (5% of overall ranking) and Percentage in the Top Decile of Their High School Class (2% of overall ranking)

An additional 7% of the rankings revolve around enrollees’ standardized test scores and high school class rank. This part of the formula rests on the assumption that a college’s selectivity and quality are intrinsically linked. U.S. News posits that a student body of academic all-stars “enabl[es] instructors to design classes that have great rigor.” I definitely think there is some validity to this idea, and I also understand that being surrounded by ridiculously smart people has the potential to produce more impactful discourse and growth.

But I buried the lede a bit here, because the underlying question is whether high school grades and test scores are indicative of an intellectually stimulating environment. I know idiots at T20s and geniuses at state schools, which is partially a reflection of the SAT/ACT (and college admissions writ large) not being a true meritocracy thanks in large part to race and class inequalities. All of this is to say that I wouldn’t be going to a highly selective school myself if I didn’t see value in learning alongside gifted peers, but I think U.S. News takes an imperfect route to measuring this.

One other note about test scores: “Schools sometimes fail to report SAT and ACT scores for students in these categories: athletes, international students, minority students, legacies, those admitted by special arrangement and those who started in the summer term.” U.S. News says they respond to this by weighting these schools’ testing data less in their model, but you should be aware that some institutions actively try to spin their numbers.

Graduate Indebtedness Total (3% of overall ranking) and Graduate Indebtedness Proportion With Debt (2% of overall ranking)

How many students graduate with debt and how much debt they graduate with make up only 5% of a school’s ranking. I imagine whether or not you think 5% is too low says a lot about your financial situation, which underscores a key flaw in “one size fits all” rankings: not everyone has the same priorities when choosing a college, but U.S. News applies a single formula to everyone.

Average Alumni Giving Rate (3% of overall ranking)

To clarify, this isn’t even a measure of a school’s endowment size. Rather, it’s “the average percentage of living alumni with bachelor’s degrees who gave to their school.” U.S. News claims “giving measures student satisfaction and post-graduate engagement,” but I’d argue there are much better bellwethers (surveys, tracking post-graduate outcomes, etc.) than whether or not someone wrote their alma mater a check.
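To make the arithmetic concrete, here is a minimal Python sketch of how a weighted formula like this one behaves. The weights are the percentages listed above; everything else is my own assumption for illustration: the metric names, the 0-100 score scale, the two made-up schools and the alternative “personal” weights. This is not U.S. News’ actual calculation, just a toy model of a weighted average.

```python
# Weights (percent of overall ranking) as listed in this article.
# The dictionary keys are hypothetical labels, not official U.S. News names.
WEIGHTS = {
    "peer_assessment": 20.0,
    "six_year_graduation_rate": 17.6,
    "first_year_retention_rate": 4.4,
    "financial_resources_per_student": 10.0,
    "class_size_index": 8.0,
    "student_faculty_ratio": 1.0,
    "graduation_rate_performance": 8.0,
    "pell_graduation_rate": 2.5,
    "pell_graduation_rate_performance": 2.5,
    "faculty_compensation": 7.0,
    "faculty_terminal_degree": 3.0,
    "faculty_full_time": 1.0,
    "sat_act_scores": 5.0,
    "top_decile_class_rank": 2.0,
    "debt_total": 3.0,
    "debt_proportion": 2.0,
    "alumni_giving_rate": 3.0,
}


def total_weight(weights):
    """Sum of all weights, rounded to absorb floating-point noise."""
    return round(sum(weights.values()), 6)


def composite_score(scores, weights):
    """Weighted average of per-metric scores.

    Assumption: every metric has already been converted to a 0-100 score.
    """
    total = sum(weights.values())
    return sum(weights[m] * scores[m] for m in weights) / total


# Two made-up schools: identical everywhere except reputation and debt.
school_a = {metric: 70.0 for metric in WEIGHTS}
school_a.update(peer_assessment=95.0, debt_total=40.0, debt_proportion=40.0)

school_b = {metric: 70.0 for metric in WEIGHTS}
school_b.update(peer_assessment=75.0, debt_total=95.0, debt_proportion=95.0)

# Hypothetical personal weights: move 15 points from reputation to debt.
PERSONAL_WEIGHTS = dict(WEIGHTS)
PERSONAL_WEIGHTS.update(peer_assessment=5.0, debt_total=12.0, debt_proportion=8.0)

if __name__ == "__main__":
    print("Weights sum to:", total_weight(WEIGHTS))
    for label, weights in [("Article weights", WEIGHTS),
                           ("Personal weights", PERSONAL_WEIGHTS)]:
        a = composite_score(school_a, weights)
        b = composite_score(school_b, weights)
        winner = "School A" if a > b else "School B"
        print(f"{label}: A={a:.2f}, B={b:.2f} -> {winner} ranks higher")
```

With the article’s weights, the school with the stronger reputation comes out ahead. Shift 15 points of weight from peer assessment to debt and the order flips. Same schools, same data, different “best.”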

Closing thoughts

That’s it. That’s the entire rankings model. Ask someone who enjoyed their college experience why they enjoyed it so much: I guarantee you most of the things they’ll mention aren’t captured by these rankings. Yet we collectively cling to this formula to tell us which schools are worth aiming for. Consider how many variables are missing, from student wellbeing to academic quality in your areas of interest. Even if you disagree with my overall philosophy on the importance of prestige, I think how we determine prestige is a question that you should engage with.
