Ever wonder who is creating the “For You” page on TikTok, “Home Tweets” on Twitter or “Suggested Reels” on Instagram — all tailored and personalized just for you? The mastermind behind all of these personalized recommendations is one or several artificial intelligence algorithms.

A social media algorithm takes in data from a user’s past viewing history and, based on a set of rules, makes decisions about what the user may want to see next. These algorithms are pieces of code that “learn” how to carry out tasks like managing large amounts of data, filtering content, and delivering the most interesting videos and pictures to the user.
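To make the idea concrete, here is a minimal sketch of that "history in, suggestion out" loop. It is a toy illustration, not how any real platform works: the function name `recommend`, the video titles and the single rule (rank by how often a topic was watched) are all assumptions made up for this example.

```python
from collections import Counter

def recommend(history, candidates, k=2):
    """Return the k candidate titles whose topic the user has watched most."""
    # Count how many times each topic appears in the viewing history.
    topic_counts = Counter(topic for _, topic in history)
    # Hypothetical rule: more past views of a topic means a higher rank.
    # sorted() is stable, so ties keep their original order in `candidates`.
    ranked = sorted(candidates, key=lambda item: topic_counts[item[1]], reverse=True)
    return [title for title, _ in ranked[:k]]

# Made-up data: (title, topic) pairs.
history = [("Cat fails", "comedy"), ("Stand-up clip", "comedy"), ("NFL recap", "sports")]
candidates = [
    ("Cooking pasta", "food"),
    ("Top 10 touchdowns", "sports"),
    ("Prank compilation", "comedy"),
]

print(recommend(history, candidates))
# ['Prank compilation', 'Top 10 touchdowns']
```

Because the user watched two comedy videos and one sports video, the comedy clip ranks first and the cooking video never surfaces. Real systems learn their rules from data rather than using one hand-written rule like this.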

Recently, social media algorithms have come under legal scrutiny. Within the last year, several state attorneys general have launched nationwide investigations into the large social media platforms Meta and TikTok. The investigation into Meta targets the techniques the company uses, including algorithms, to increase the frequency and duration of engagement by young users.

The number of social media app users has grown rapidly over the past ten years. According to Statista, social media had 4.26 billion users in 2021, a figure expected to increase to 6 billion by 2027. Students, as consumers of social media, were asked about their experiences with social media algorithms and the potential impact of those algorithms on their opinions and lives.

Positive experiences

“When I’m on YouTube, and I watch a funny video, recommendations are nice because then I find another funny video,” junior Michael Emerson said. “[Meanwhile], Instagram algorithms make it easy for me to learn about an NFL player, particularly when I am comparing players for Fantasy Football. When I follow a player, my home feed is filled with these memes, reviews, and videos about him. It feels like somehow Instagram can read my mind.”

From music to homework help, algorithms help users see more of what they are interested in without much effort spent scrolling and searching. This, in turn, can save time and protect users from information overload, the overexposure to information that makes understanding and decision-making difficult.

Algorithms process an enormous amount of data at an extremely fast pace. Take, for instance, Facebook, which had 2.934 billion monthly active users in July 2022. For every user, the algorithms consider ranking signals such as age, interactivity with their connections, the content they click on, and their level of engagement. This translates to an enormous volume of data being evaluated in fractions of a second, which would be impossible to handle manually.
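One common way to combine several signals into a single ranking is a weighted score. The sketch below assumes that approach purely for illustration: the `Post` fields, the weights and the sample posts are hypothetical and are not Facebook's actual formula. Age is left out to keep the example short.

```python
from dataclasses import dataclass

@dataclass
class Post:
    title: str
    from_close_connection: bool  # does the user interact with the poster often?
    topic_click_rate: float      # share of similar posts the user clicked, 0 to 1
    engagement: float            # likes, comments and shares scaled to 0 to 1

# Hypothetical weights, chosen only for illustration.
WEIGHTS = {"connection": 0.5, "clicks": 0.3, "engagement": 0.2}

def score(post):
    """Combine the signals into one number; higher means shown earlier."""
    return (WEIGHTS["connection"] * float(post.from_close_connection)
            + WEIGHTS["clicks"] * post.topic_click_rate
            + WEIGHTS["engagement"] * post.engagement)

def rank_feed(posts):
    """Order posts from highest to lowest score."""
    return sorted(posts, key=score, reverse=True)

# Made-up posts for a single user.
posts = [
    Post("Viral dance clip", False, 0.2, 0.9),
    Post("Friend's vacation photo", True, 0.4, 0.3),
    Post("Fantasy football tips", False, 0.9, 0.5),
]

for p in rank_feed(posts):
    print(f"{score(p):.2f}  {p.title}")
```

With these made-up weights, the friend's photo (0.68) outranks the football tips (0.37) and the viral clip (0.24). A platform would repeat a calculation like this for every candidate post and every user, which is why it cannot be done by hand.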

Furthermore, because algorithms are designed to show people content based on their interests, people can easily find like-minded people, support groups, and interest groups. Pew Research shows that nearly two-thirds of teens who have made a new friend online say they have met new friends on a social media platform. Friend recommendation algorithms on social networking platforms help people find new friends and get to know them better.

“Recommendations for video games have led me [to] find people with similar interests and make new friends on video game platforms with whom I am still good friends and play games,” Emerson said.

Social media can also be leveraged for advocacy, activism, public education and innovation. On the business side, algorithms can help customers find more of the products they are looking for and allow businesses to customize their marketing to specific demographics and interests.

“When I search for Lululemon off-brands, the next time I open the browser, I see ads like ‘10 best Lululemon dupes on Amazon,’ ‘Lululemon look-alikes that are better than the real deal’ and many more similar products,” Emerson said. “In the beginning, it felt creepy, as if someone was watching me. But [targeted ads] help me compare prices and find what I want much [more] easily. I could find sellers with discounted prices that I wouldn’t have known or come across if I had searched myself.”

Negative experiences

Although algorithms can make it easier to find content or products, they also risk luring people into watching certain content or buying something they may not need. From a social media company’s standpoint, the ultimate goal is to increase views and profits. Hence, from a user’s standpoint, the experiences are not always positive.

“One time, I watched an Instagram reel on a brain teaser, and I started getting a bunch of them. It was fun at first, but there were so many they all started getting annoying,” junior Chris Gray said. “The next morning, I had to wake up to more brainteasers, and eventually, I had to click ‘not interested’ to get them off.”

A phenomenon called Social Media Fatigue (SMF) has been described by researchers as “a set of negative emotional responses to activities on social media platforms, such as tiredness, burnout, exhaustion, frustration, and disinterest toward communication.” Studies suggest that compulsive media use significantly triggers social media fatigue, affecting users’ psychological and mental well-being.

“These algorithms should be limited. It is not direct, but they’re imposing on your free will,” Gray said. “Even though you can always ex out, they know exactly what will catch you by the hook. So in a way, they’re controlling you. Sometimes I have to catch myself, delete certain things or just shut [down] the internet and go outside.”

Psychiatrists at Stanford University warn about the addictive potential and mental health risks of algorithms. Receiving a “like” activates the same dopamine pathways involved in motivation, reward, and addiction, which keeps a user hooked on the platform. They further explain that trapping users in endless scrolling loops can lead to social comparison, causing erosion of self-esteem, depressed mood, and decreased life satisfaction. Some try to cope with these feelings by attacking other people’s sense of self, which can lead to cyberbullying. This is one occasion where algorithms have no control over what a user posts or the legitimacy of that information.

“Social media algorithms can pose many dangers to students who are not aware of how they work. Anytime you interact with mainstream social media, you have to acknowledge that you are being tracked. Every letter you type, link you click, photo you share is being recorded, analyzed and monetized for the company’s benefit,” Parkway’s Chief Information Officer Jason Rooks said.

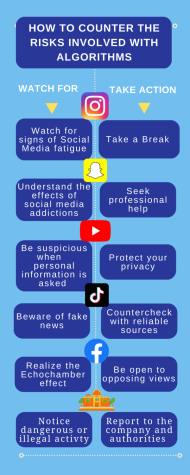

Tackling fake news on social media, particularly when such content goes viral, is also a challenge. The algorithms present only the content they predict users will most likely be interested in; they do not filter for whether that content is true. Rooks asks students to be aware of how these algorithms work and to take steps to avoid falling prey to these dangers.

“On one occasion, our English teacher made us read something about Nancy Pelosi. The rumor about her husband being gay came up at the top of my recommended feed. If I was stuck on that, I could be misled,” Emerson said. “If I watch Fox News only, or CNN only, and [stay] on one side of the media, then my viewpoint would be framed, often incorrectly.”

The echo chamber effect, also known as “the bubble effect” or “the rabbit hole effect,” results from how social media algorithms group people of similar thoughts, eliminating diversity of opinions and hurting users’ ability to understand differing beliefs. It is believed to be a driving force for increasing political polarization. People unaware of the underlying algorithms may assume their feed to be news instead of content specifically curated for them.

“The most important thing is that we are aware of the dangers as we navigate these digital spaces. Algorithms are written by humans. Humans have bias, and that bias can be written into algorithms. We need to make sure we are looking for that bias and calling it into question,” Rooks said.

Should content be policed?

Although awareness of the potential dangers is the first step, there has been increasing debate on whether social media content should be policed and, if so, who should be responsible for content policing.

“[Social media] companies shouldn’t control content. They could if they like, but that’s restricting information from people,” junior Insang Lee said. “If [companies] do police activity on social media, [items] like age verification [should] be on the policing list. I believe in freedom of speech, but sometimes information can get to kids and young people quickly. There should be some oversight.”

While striking a balance between freedom of speech and safety online is still in the works, the U.S. Supreme Court is set to hear up to three cases in the coming months dealing with legal protections of social media companies in terms of their content.

One such case, Gonzalez v. Google, is aimed specifically at the targeted recommendations feature of the platform. Nohemi Gonzalez was a 23-year-old American student who was killed in a terrorist attack in Paris on Nov. 13, 2015, while studying abroad. The Gonzalez family filed a lawsuit against Google, arguing that the targeted recommendations algorithm of YouTube, which is owned by Google, recommended ISIS videos to users. The plaintiffs claimed that this made it easier for users to locate terrorist content, thereby assisting terrorist groups in spreading their message.

“I don’t think Google should be held liable for what happened in the [Gonzalez v. Google] case. At the end of the day, it was the choice of the people to incite violence. They decided to be part of ISIS, travel to Paris and take all the other steps to cause violence. This is more a human problem rather than a technology problem,” freshman Zoran Todorovic said.

Google’s support page states its policy on harmful and dangerous content: “YouTube doesn’t allow content that encourages dangerous or illegal activities that risk serious physical harm or death.” Apart from asking viewers to report videos that violate the policy, the page does not describe the steps the company takes to enforce it.

“YouTube should have been able to remove this content. Yet YouTube still recommended this content to these watchers since its algorithm knew the people who had [terrorist] videos show up [on their recommended pages] would be interested [in them],” Emerson said. “Although it feels like the algorithm is responsible for giving you a recommendation, it is recommending that because you watched something, to begin with. It’s not Google’s fault somebody decided to kill somebody.”

Until further policies, laws and protections are put in place, Rooks advises users to be deliberate about what they click on and the content they choose to consume.

“Social media companies have an obligation to remove potentially harmful content. Now the issue is how do you define ‘harmful content’? Defining harmful content has been debated since humans started writing,” Rooks said. “Social media companies need to have well-written policies regarding content, have the mechanisms in place to enforce those policies, and be transparent with users regarding their content policies. Then users need to make an educated decision on whether or not to use that platform.”

Will Gonsior • Jan 10, 2023 at 12:26 pm

Ban social media algorithms. They’re more dangerous to democracy than drugs with more “physical” effects and there’s no other way around them.

Great article, by the way.